Meta currently operates many data centers with GPU training clusters around the world. Our data centers are the backbone of our operations, meticulously designed to support the scaling demands of compute and storage. A year ago, however, as the industry reached a critical inflection point due to the rise of artificial intelligence (AI), we recognized that to lead in the generative AI space we’d need to transform our fleet.

Our increased focus on AI was driven both by its growing role in driving business outcomes and by the huge growth in the computational needs of these workloads. In addition to wider use of traditional AI for things like ad targeting, we have also seen increasing numbers of large generative AI models that mimic near-human intelligence in everything from human verbal interaction to the creation of images and other media. These models are huge, with trillions of training parameters, and training them requires vast resources.

In this process, we’ve built one of the world’s largest AI training infrastructures, and it has been growing exponentially over the last few years. Meta’s training infrastructure comprises dozens of AI clusters of varying sizes, with a plan to scale to 600,000 GPUs in the next year. It runs hundreds of training jobs every day from many different Meta teams. The characteristics of training jobs vary greatly too. They can be as small as a single GPU running for a couple of minutes, while generative AI jobs can have trillions of parameters and often span hundreds of hosts that need to work together and are very sensitive to interruptions. In addition, training jobs are tied much more closely to the hardware, and that hardware varies greatly. Meta runs different types of backend networks, topologies, and training jobs that have tight dependencies between software and hardware components.

This transition has not been without its challenges. We had to reconfigure the fleet without disrupting our hypergrowth, a task akin to rebuilding an airplane mid-flight. This pushed us to innovate and collaborate with vendors and utility companies to create a supportive ecosystem. In this blog post we will discuss only one of these transformations. We will describe how Meta maintains these training clusters and what sets us apart from the average AI environment. And what do we mean by maintaining? Basically, any kind of operation that updates or verifies software and firmware components in the clusters, including the networking path.

The main characteristics of GPU training

GPU training has some demanding characteristics:

- Capacity guarantees: While some training jobs can be paused, a lot of Meta jobs are time-critical and either recurring or online. This means we can’t take large amounts of capacity offline by default.

- Bad hosts are very bad: Since many jobs require all hosts to be synchronized, bad hosts that are a little slower, have some non-fatal hardware fault, or have networking issues are extremely damaging.

- Low interruption rate: Since many hosts work with each other on a shared problem, AI training jobs are sensitive to interruptions.

- Rollout safety: The AI software stack is deep, and problems are often hard to pinpoint, so we need to be careful when rolling out new components.

- Host consistency: AI training jobs are in general cross-host, and while there are rarely hard incompatibilities outside of the CUDA version, we have learned that cluster consistency is highly important for debugging and SEV avoidance.
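To make the "bad hosts are very bad" point concrete, here is a minimal sketch under a simplifying assumption: every training step is fully synchronous, so it finishes only when the slowest host finishes. The function names and the numbers are illustrative and do not come from Meta's tooling.

```python
from statistics import median


def synchronous_step_time(host_step_times):
    """A fully synchronized step ends only when the slowest host is done."""
    return max(host_step_times)


def slowdown(host_step_times):
    """Ratio of the actual step time to that of a typical (median) host."""
    return synchronous_step_time(host_step_times) / median(host_step_times)


# Hypothetical per-step times in seconds for a 1,000-host job.
healthy = [1.0] * 1000
one_bad = [1.0] * 999 + [1.3]  # a single host that is 30% slower

print(slowdown(healthy))
print(slowdown(one_bad))
```

In this toy model a single slow host among 1,000 slows every step of the whole job by the same 30%, which is why mildly degraded hosts matter far more here than they would for independent workloads.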

What’s special about Meta’s GPU training?

Meta uses bespoke training hardware with the newest chips possible and high-performance backend networks that are highly optimized for speed. We also try to stay as current and flexible as possible with the software stack; in the case of firmware upgrades, this allows us to use new features or reduce failure rates.

Together this means we have more than:

- 30 maintenance operations

- 50 different components that are updated

- Three different host-verification tasks to ensure optimal performance and stability

- Hundreds of disruptive AI host tasks every day

And we need to do them safely, while guaranteeing capacity. After all, our training clusters are also used flexibly to run a wide variety of workloads, from single-host jobs to some of the largest training jobs in the world, and from offline tasks to jobs that need to be up and running 24/7.

Given the variety of upgrades, we have a large number of overlapping in-flight changes at any given time, including some that are applied continuously, such as verification tasks. Accepting this gives Meta the flexibility we need to use cutting-edge hardware, scale our infrastructure, and use both in flexible ways. In smaller environments it’s often possible to keep clusters in a consistent state and upgrade the whole cluster and all of its firmware and software components in the same maintenance window. Doing this in a large, diverse environment like Meta’s, however, would introduce big risks and be operationally infeasible. Instead, we ensure components are compatible with each other and roll out component upgrades in a sliding fashion. This approach also allows us to guarantee capacity availability.

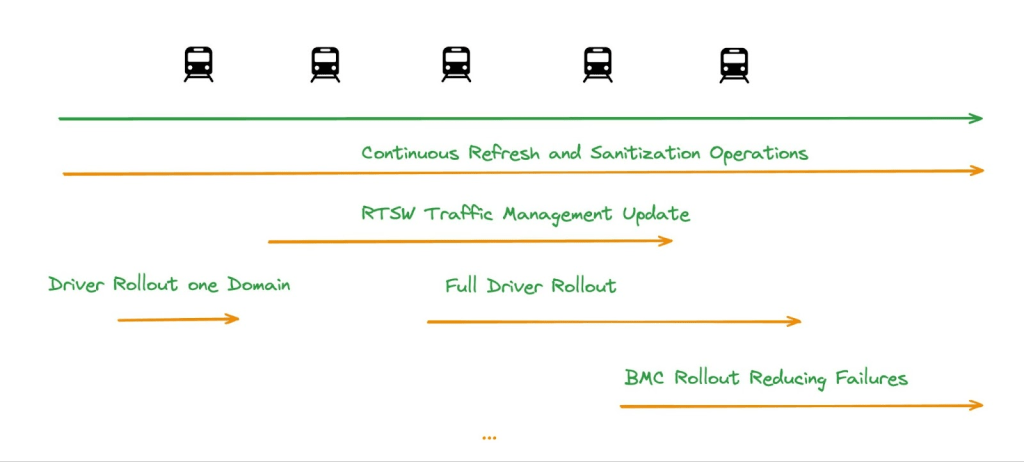

Maintenance trains

Outside of special cases, Meta maintains its fleet of clusters using a technique called maintenance trains. This is used for all capacity, including compute and storage capacity. A small number of servers are taken out of production and maintained with all applicable upgrades. Trains provide the guarantee that all capacity minus one maintenance domain is up and running 24/7, thus providing capacity predictability. This is mandatory for all capacity that is used for online and recurring training.

Maintenance trains pick up any new upgrade and guarantee a full-visit cycle within a guaranteed timeframe. Longer-running upgrades can have lower rollout guarantees and may be scheduled to be applied over multiple cycles. So you can have many overlapping upgrades, and, if beneficial, upgrades can be aligned.
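The train mechanics described above can be sketched roughly as follows. This is an illustrative toy, not Meta's implementation: the `Host` class, the `run_train_cycle` function, and the upgrade names are all hypothetical, and real trains also handle draining, verification, and failures.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    domain: int  # hypothetical maintenance-domain id
    applied: set = field(default_factory=set)
    in_production: bool = True


def run_train_cycle(hosts, pending_upgrades):
    """Visit every maintenance domain once, one domain at a time.

    Returns the largest number of hosts that were out of production
    at any point during the cycle.
    """
    max_out = 0
    for domain in sorted({h.domain for h in hosts}):
        batch = [h for h in hosts if h.domain == domain]
        for h in batch:
            h.in_production = False  # take the whole domain out
        max_out = max(max_out, sum(not h.in_production for h in hosts))
        for h in batch:
            h.applied |= set(pending_upgrades)  # apply everything pending
        for h in batch:
            h.in_production = True  # return the domain before moving on
    return max_out


# 12 hypothetical hosts spread over 4 domains of 3 hosts each.
hosts = [Host(f"h{i}", domain=i % 4) for i in range(12)]
max_out = run_train_cycle(hosts, ["kernel", "nic-fw"])
print(max_out)
```

Because each domain is returned to production before the next one is taken out, the number of hosts out of production never exceeds the size of one domain, and after a full cycle every host has received every pending upgrade.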

For AI capacity, we have optimized domains that allow for different kinds of AI capacity, very strict SLOs, and a contract with services that allows them to avoid maintenance-train interruptions where possible.

Gradual rollouts

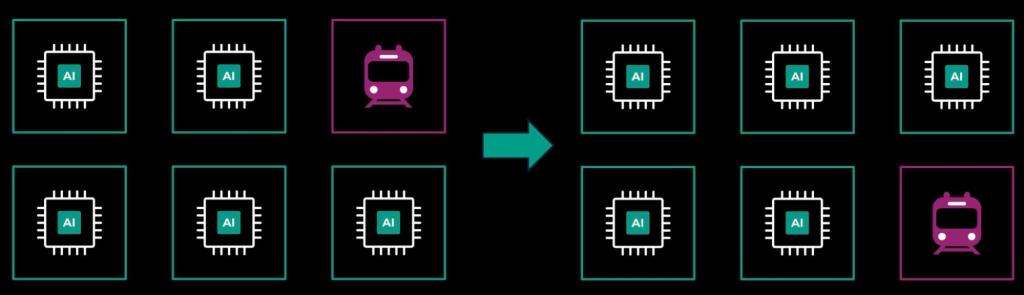

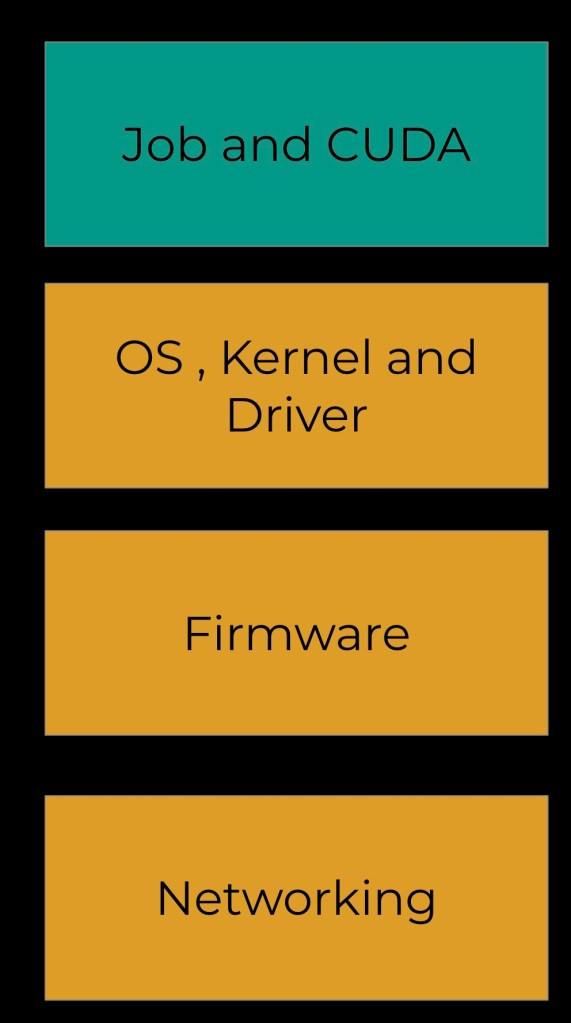

Because of the scale of our infrastructure, we had to ensure that all disruptive rollouts outside of special cases happen in a gradual fashion. This means different servers in a cluster can run a different host stack for a short period of time. This is quite normal in traditional capacity but challenging in AI training, since AI jobs are very closely tied to the hardware.

At Meta, we’ve ensured that jobs have a consistent stack while lower-level components are upgraded in a gradual fashion. In contrast, the AI job itself, which includes the CUDA library, is always consistent. This distinction matters because lower-level components often take hours to install and configure or require rebooting the host, while higher-level components in the job container itself can be restarted fluidly.

This sounds simple, but because of the tight integration of AI with hardware, we have needed to do a lot of development, including careful testing at all lower levels, special monitoring, and close work with vendors.

By and large, this has been very successful. The AI stack in general has matured a lot over the last three years. We also added tooling for rare compatibility-breaking upgrades.

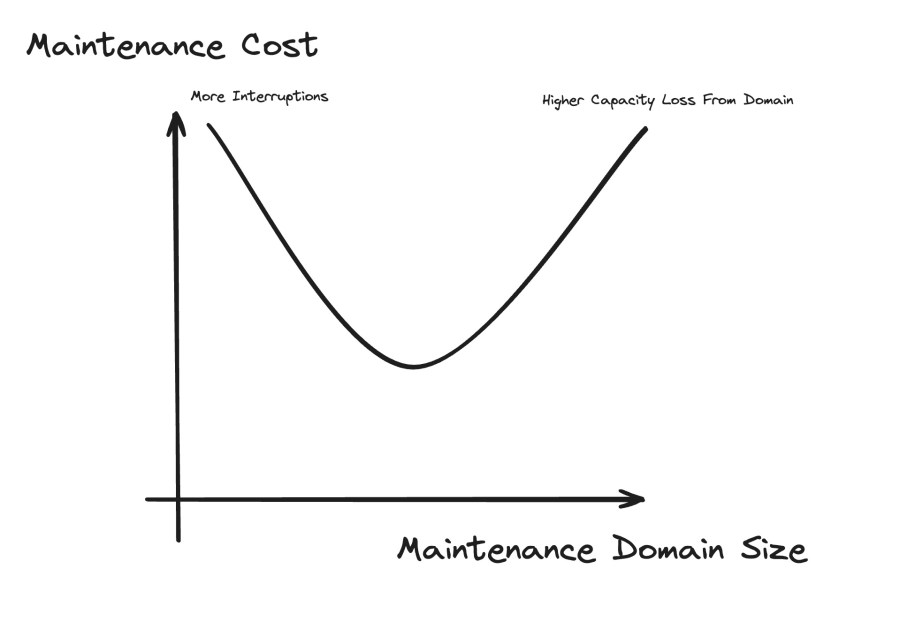

Selecting the correct maintenance domains

One way to ensure optimal AI performance was to work with AI teams to design the optimal size of maintenance domains. A maintenance domain is the percentage of capacity we take down in one go, and selecting the optimal size is a function of both the cost of interruptions and the capacity that is lost during the maintenance duration. Since interruption costs are high for AI jobs, optimizing this relationship allowed us to significantly reduce the maintenance overhead for AI capacity.
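The trade-off can be illustrated with a deliberately simple cost model. Everything in it is an assumption made for illustration, not Meta's actual model or numbers: one domain is assumed to be out of production for the whole train cycle, every job is assumed to span the same number of hosts, and a job is assumed to be interrupted once for each domain that overlaps it.

```python
def maintenance_overhead(domain_hosts, total_hosts, job_hosts,
                         cycle_hours, interruption_cost):
    """Overhead of one train cycle, in host-hours, under the toy model."""
    # Capacity term: one domain is always out of production during the cycle.
    capacity_lost = domain_hosts * cycle_hours
    # Interruption term: each job is hit once per domain that overlaps it.
    jobs = total_hosts // job_hosts
    hits_per_job = -(-job_hosts // domain_hosts)  # ceiling division
    return capacity_lost + jobs * hits_per_job * interruption_cost


def best_domain_size(candidates, **params):
    """Pick the candidate domain size with the lowest modeled overhead."""
    return min(candidates, key=lambda d: maintenance_overhead(d, **params))


# Hypothetical fleet: 4,096 hosts, 512-host jobs, a 240-hour cycle, and
# 256 host-hours lost per interruption (512 hosts x 0.5 h to restart).
params = dict(total_hosts=4096, job_hosts=512,
              cycle_hours=240, interruption_cost=256)
best = best_domain_size([16, 32, 64, 128, 256, 512], **params)
print(best, maintenance_overhead(best, **params))
```

In this model, small domains keep little capacity offline but interrupt large jobs many times per cycle, while large domains do the opposite. When interruptions are expensive, as they are for large AI jobs, the minimum shifts toward larger domains.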

OpsPlanner: Meta disruptive-work orchestrator

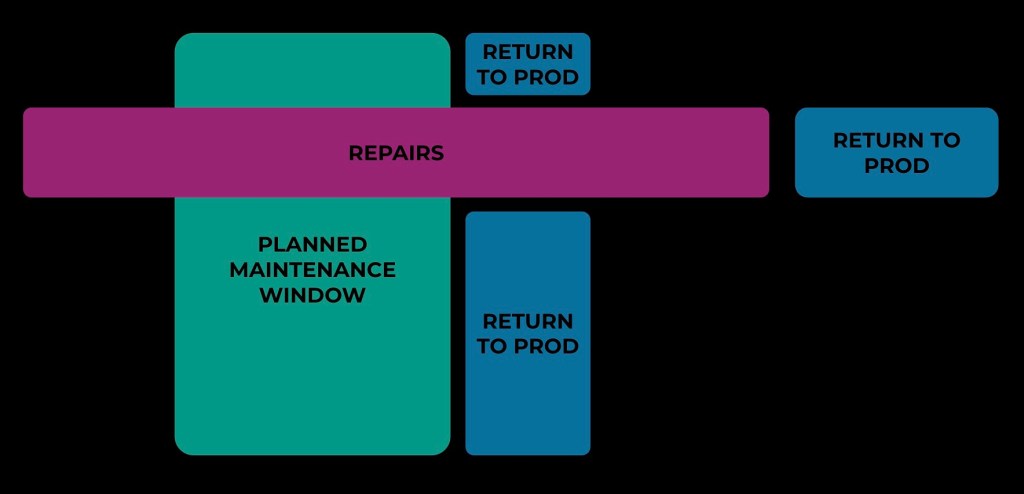

Critical to AI capacity are the consistency requirements. For example, if you want to move to a new CUDA version, you may need all of the capacity on a new driver version. This becomes really difficult in an environment with hundreds of hosts and lots of planned and unplanned operations that may overlap with each other. To do this safely and guarantee hosts have the correct upgrades applied before entering production, Meta has unified them in the OpsPlanner work orchestrator. Not only can it work on overlapping scopes of operations and correctly serialize them, it also takes hosts safely out of and back into production. In addition, it has a built-in handover flow that ensures correct escalation behavior and avoids overlaps and deadlocks. OpsPlanner can also ensure upgrades are applied to hosts before they are returned to production. OpsPlanner also owns the planned-maintenance and failure buffers and safeguards them. Furthermore, it’s highly effective and efficient: OpsPlanner currently handles a million operations per day.
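As a rough illustration of two of these ideas, serializing operations whose scopes overlap and gating a host's return to production on required upgrades, here is a minimal sketch. It is not OpsPlanner's API: the `serialize` and `can_return_to_production` functions, the round-based scheduling, and the operation names are all hypothetical.

```python
def serialize(operations):
    """Assign operations to rounds so no host is touched twice in a round.

    `operations` is a list of (name, set_of_hosts) in submission order.
    Operations with overlapping scopes keep their submission order;
    operations with disjoint scopes may share a round.
    """
    next_free = {}  # host -> first round in which the host is free again
    rounds = []
    for name, scope in operations:
        rnd = max((next_free.get(h, 0) for h in scope), default=0)
        while len(rounds) <= rnd:
            rounds.append([])
        rounds[rnd].append(name)
        for h in scope:
            next_free[h] = rnd + 1
    return rounds


def can_return_to_production(applied, required):
    """A host may re-enter production only with every required upgrade."""
    return set(required) <= set(applied)


ops = [
    ("kernel", {"h1", "h2"}),
    ("nic-fw", {"h2", "h3"}),  # overlaps "kernel" on h2
    ("verify", {"h3"}),        # overlaps "nic-fw" on h3
    ("bios", {"h4"}),          # disjoint, can run alongside "kernel"
]
rounds = serialize(ops)
print(rounds)
```

Tracking the next free round per host keeps overlapping operations in submission order, so a later operation cannot jump ahead of an earlier one that touches the same host.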

Safety and failure scenarios

Meta has a deep stack of safety features that includes:

- Autostop of maintenance trains if maintenance or failure buffers are exhausted;

- Automatic offboarding of failing upgrades; and

- Rollout phases for upgrades, so that only well-tested changes reach global systems.

If something does go wrong, however, we can react quickly, depending on the fix needed, with emergency trains, large-scale maintenance for breaking upgrades, and more.
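The autostop safeguard from the list above could be approximated by a check like the following before a train takes its next domain. This is a sketch under assumed semantics: the function name, its parameters, and the exact meaning of "exhausted" are hypothetical and are not taken from Meta's systems.

```python
def train_may_proceed(hosts_in_maintenance, hosts_failed,
                      maintenance_buffer, failure_buffer,
                      next_domain_size):
    """Return True if the train may take the next domain out of production.

    Assumption: each buffer is a number of hosts that the cluster can
    afford to have unavailable for that reason.
    """
    # Failure buffer exhausted: stop the train and keep capacity in service.
    if hosts_failed >= failure_buffer:
        return False
    # Maintenance buffer: the next domain must still fit into what is left.
    return hosts_in_maintenance + next_domain_size <= maintenance_buffer


# Hypothetical cluster with room for 32 hosts in maintenance and 10 failures.
print(train_may_proceed(0, 3, 32, 10, next_domain_size=32))   # fits
print(train_may_proceed(16, 3, 32, 10, next_domain_size=32))  # would overflow
print(train_may_proceed(0, 10, 32, 10, next_domain_size=32))  # failures exhausted
```

Stopping the train when unplanned failures have already consumed the failure buffer keeps planned maintenance from adding to an existing capacity shortfall.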

Rapidly moving to the future of generative AI

At Meta, we believe in moving fast and learning by doing. Rapid innovation is in our ethos. This is what fundamentally shaped our journey as we continually innovated toward building the foundational infrastructure that makes us leaders in generative AI. We will remain dedicated to creating technologies that not only benefit Meta but also have a positive impact on society as a whole.

As we move forward, we invite you to join us on this journey. Together, we can shape a future where AI is not just a tool but a force for good, transforming industries, empowering individuals, and creating a more sustainable world.

The best is yet to come, and we are excited to pioneer tomorrow’s possibilities in generative AI.