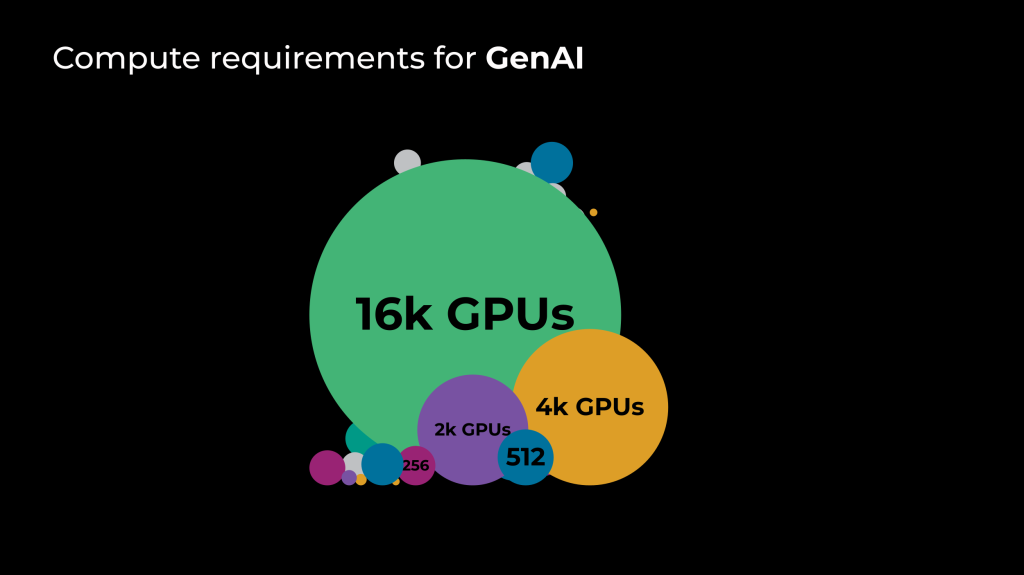

As we continue to focus our AI research and development on solving increasingly complex problems, one of the most significant and challenging shifts we’ve experienced is the sheer scale of computation required to train large language models (LLMs).

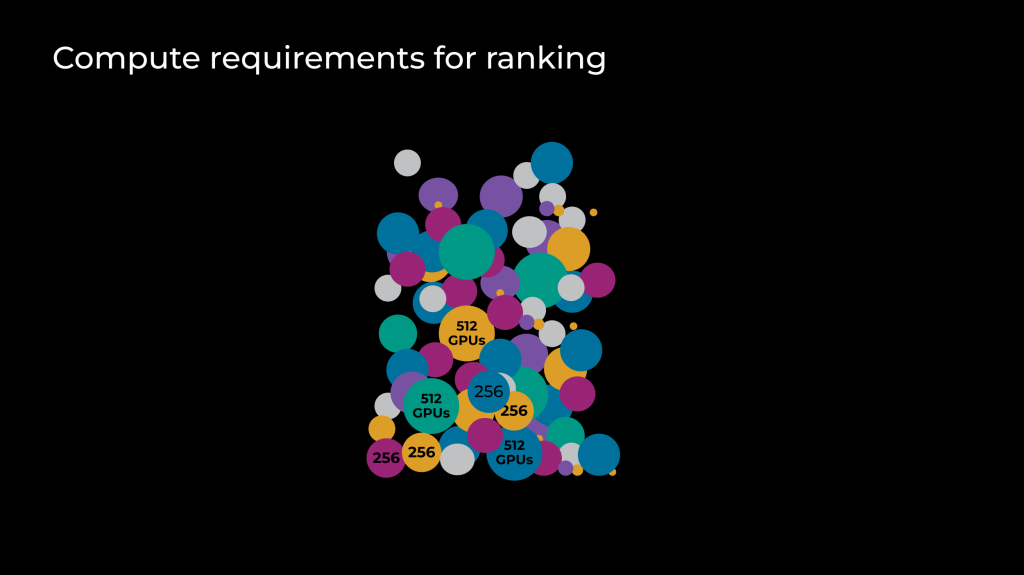

Traditionally, our AI model training has involved training a massive number of models, each requiring a comparatively small number of GPUs. This was the case for our recommendation models (e.g., our feed and ranking models), which ingest vast amounts of information to make accurate recommendations that power most of our products.

With the advent of generative AI (GenAI), we’ve seen a shift towards fewer jobs, but incredibly large ones. Supporting GenAI at scale has meant rethinking how our software, hardware, and network infrastructure come together.

The challenges of large-scale model training

As we increase the number of GPUs in a job, the likelihood of an interruption due to a hardware failure also increases. In addition, all of these GPUs still need to communicate on the same high-speed fabric to perform optimally. This underscores the importance of four factors:

- Hardware reliability: Ensuring that our hardware is reliable is important. We need to minimize the chances of a hardware failure interrupting a training job. This involves rigorous testing and quality control measures, and automation to quickly detect and remediate issues.

- Fast recovery on failure: Despite our best efforts, hardware failures can and do occur. When they do, we need to be able to recover quickly. This involves reducing re-scheduling overhead and re-initializing training quickly.

- Efficient preservation of the training state: In the event of a failure, we need to be able to pick up where we left off. This means we need to checkpoint our training state regularly and store and retrieve training data efficiently.

- Optimal connectivity between GPUs: Large-scale model training involves transferring vast amounts of data between GPUs in a synchronized fashion. A slow data exchange between a subset of GPUs can compound and slow down the whole job. Solving this problem requires a robust and high-speed network infrastructure as well as efficient data transfer protocols and algorithms.
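The checkpoint-and-resume idea in the third factor can be sketched in a few lines. This is a minimal illustration in plain Python, not a description of our training stack: `save_checkpoint`, `load_checkpoint`, `train`, and the contents of the `state` dictionary are hypothetical names chosen for the example, and a real job would persist model and optimizer state with its framework's own utilities.

```python
import json
import os
import tempfile

def save_checkpoint(path, state):
    """Write state atomically so a crash mid-write never corrupts the last good checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)  # atomic rename over the previous checkpoint

def load_checkpoint(path):
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0, "loss_sum": 0.0}
    with open(path) as f:
        return json.load(f)

def train(path, total_steps, checkpoint_every, fail_at=None):
    """Run (or resume) a toy training loop, checkpointing every few steps."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if fail_at is not None and step == fail_at:
            raise RuntimeError("simulated hardware failure")
        state["loss_sum"] += 1.0 / (step + 1)  # stand-in for a real training step
        state["step"] = step + 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    return state
```

After a failure, calling `train` again with the same path resumes from the last saved step, so only the work done since that checkpoint is repeated. The checkpoint interval trades write overhead against the amount of work lost per failure.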

Innovating across the infrastructure stack

Perfecting every layer of our infrastructure stack is important because of the demands of GenAI at scale. This has encompassed developments in a wide range of areas.

Training software

We enable researchers to use PyTorch and other new open source developments, facilitating extremely fast research-to-production development. This includes developing new algorithms and techniques for efficient large-scale training and integrating new software tools and frameworks into our infrastructure.

Scheduling

Efficient scheduling helps ensure that our resources are used optimally. This involves sophisticated algorithms that can allocate resources based on the needs of different jobs, and dynamic scheduling to adapt to changing workloads.
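As a rough illustration of allocating resources by job need, the sketch below places jobs greedily in priority order and starts a job only if its whole GPU request fits. It is a toy under stated assumptions, not our scheduler: the `Job` fields, the priority rule, and the all-or-nothing policy are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Job:
    name: str
    gpus_needed: int
    priority: int  # hypothetical convention: higher values are placed first

def schedule(jobs, free_gpus):
    """Greedy all-or-nothing placement: a job starts only if its full GPU request fits."""
    started, waiting = [], []
    # Highest priority first; among equal priorities, smaller requests first.
    for job in sorted(jobs, key=lambda j: (-j.priority, j.gpus_needed)):
        if job.gpus_needed <= free_gpus:
            free_gpus -= job.gpus_needed
            started.append(job.name)
        else:
            waiting.append(job.name)
    return started, waiting, free_gpus
```

Re-running `schedule` on the waiting jobs whenever GPUs are released is the simplest form of adapting to a changing workload.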

Hardware

We need high-performance hardware to handle the computational demands of large-scale model training. Beyond size and scale, many hardware configurations and attributes need to be optimized for GenAI. Given that hardware development times are traditionally long, we had to adapt existing hardware, and to this end we explored various dimensions including power, HBM capacity and speed, and I/O.

We also pivoted by modifying the Grand Teton platform, which was developed using NVIDIA H100 GPUs: we increased the TDP of the GPUs to 700W and moved to HBM3 on the GPUs. Since we did not have time to change the cooling infrastructure, we had to remain in an air-cooled environment. The mechanical and thermal designs had to change to accommodate this, which triggered a validation cycle to support a large-scale deployment.

All of these hardware-related changes were challenging because we had to find a solution that fit within the existing resource constraints, with very little freedom to make changes, while meeting a tight schedule.

Data center deployment

Once we’ve chosen a GPU and system, the task of placing them in a data center for optimal usage of resources (power, cooling, networking, etc.) requires revisiting trade-offs made for other types of workloads. Data center power and cooling infrastructure cannot be changed quickly (or easily), and we had to find an optimal layout that allowed maximum compute capability within a data hall. This required relocating supporting services such as readers out of the data hall and packing in as many GPU racks as possible, maximizing the power and network capability for the highest compute density with the largest network cluster.

Reliability

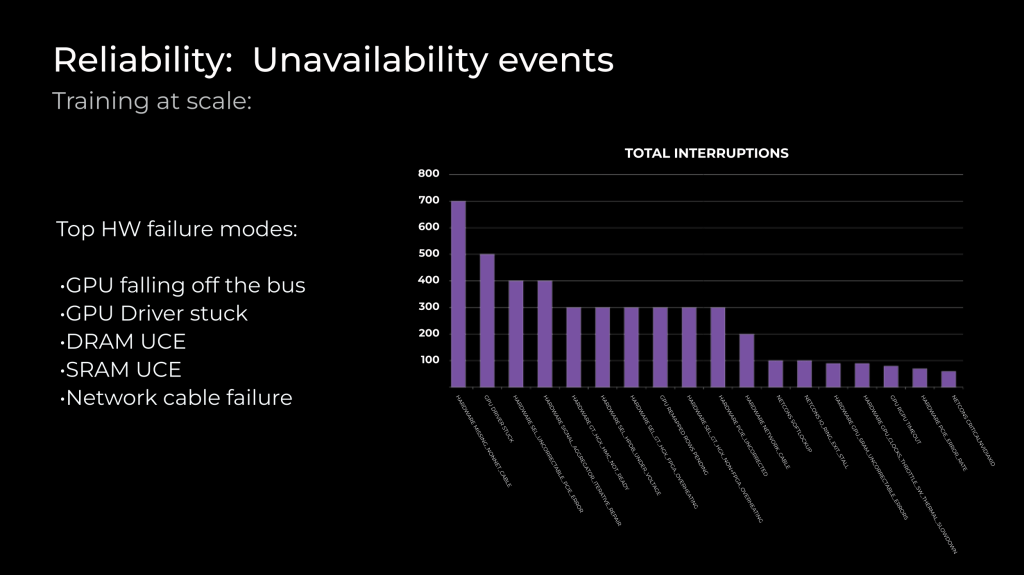

We need to plan for detection and remediation to minimize downtime during hardware failures. The number of failures scales with the size of the cluster, and having a job that spans the cluster makes it necessary to keep adequate spare capacity to restart the job as soon as possible. In addition, we monitor failures and can sometimes take preventive measures to mitigate downtime.
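To see why failures scale with cluster size, consider a simplified model in which every GPU fails independently with the same probability over some time window. Both the independence assumption and the failure rate used below are illustrative assumptions, not measured values.

```python
def interruption_probability(num_gpus, per_gpu_failure_prob):
    """Probability that at least one of num_gpus fails, assuming independent failures."""
    return 1.0 - (1.0 - per_gpu_failure_prob) ** num_gpus

def expected_failures(num_gpus, per_gpu_failure_prob):
    """Expected number of failed GPUs in the same window (linear in cluster size)."""
    return num_gpus * per_gpu_failure_prob

# Hypothetical per-GPU failure probability of 1e-4 per window.
small_job = interruption_probability(8, 1e-4)       # about 0.08%
large_job = interruption_probability(24_000, 1e-4)  # about 91%
```

Under this model, a job spanning the whole cluster is interrupted in most windows even when each individual GPU is very reliable, which is why spare capacity and quick restarts matter.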

Some of the most frequent failure modes we have observed are:

- GPUs falling off: In this case, GPUs are not detected by the host on PCIe. There are several reasons for this failure, but it is seen more often early in a server’s life and settles as the server ages.

- DRAM & SRAM UCE: Uncorrectable errors (UCEs) are common in memories. We monitor and identify repeat offenders, track them against thresholds, and initiate RMAs when error rates exceed vendor thresholds.

- HW network cable: In the general category of unreachable servers, these failures are also seen most often early in the life of the server.
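Tracking repeat offenders against a threshold can be sketched as a simple count per device. The event format, the device IDs, and the threshold value here are hypothetical; real thresholds come from the vendor.

```python
from collections import Counter

def find_repeat_offenders(error_events, threshold):
    """Return device IDs whose uncorrectable-error count exceeds threshold, sorted."""
    # Each event is a (device_id, error_kind) pair; only the device matters for counting.
    counts = Counter(device_id for device_id, _kind in error_events)
    return sorted(d for d, n in counts.items() if n > threshold)

events = [
    ("gpu-3", "DRAM"),
    ("gpu-3", "SRAM"),
    ("gpu-7", "DRAM"),
    ("gpu-3", "DRAM"),
]
rma_candidates = find_repeat_offenders(events, threshold=2)
```

In this example only `gpu-3` exceeds the threshold, so it alone becomes a candidate for an RMA.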

Network

Large-scale model training involves transferring vast amounts of data quickly between GPUs. This requires robust and high-speed network infrastructure as well as efficient data transfer protocols and algorithms.

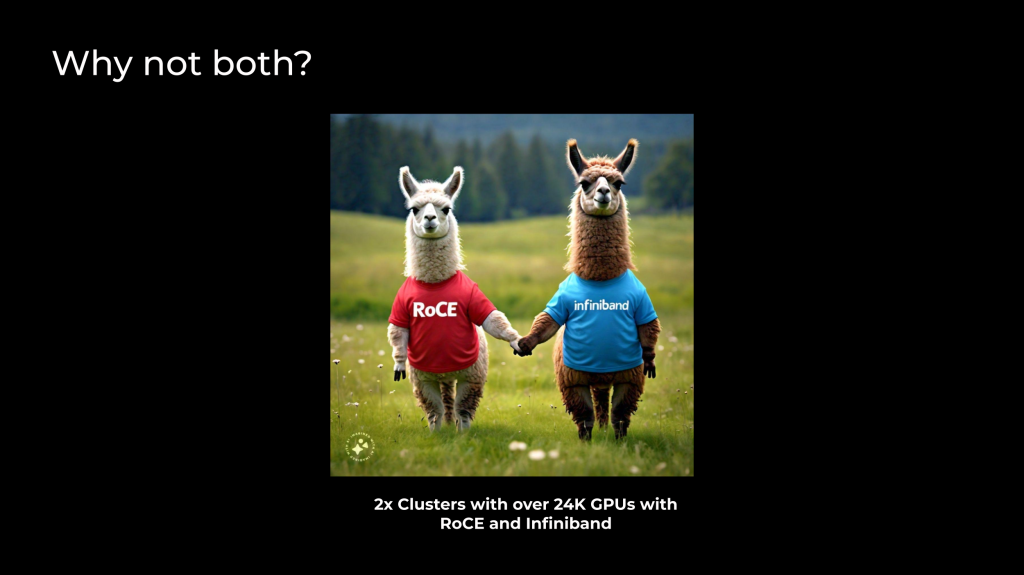

There are two leading choices in the industry that fit these requirements: RoCE and InfiniBand fabrics. Both of these options had tradeoffs. On the one hand, Meta had built RoCE clusters for the past four years, but the largest of those clusters only supported 4K GPUs. We needed significantly larger RoCE clusters. On the other hand, Meta had built research clusters with InfiniBand as large as 16K GPUs. However, those clusters were not tightly integrated into Meta’s production environment, nor were they built for the latest generation of GPUs and networking. This made for a difficult decision about which fabric to build with.

So we decided to build both: two 24K-GPU clusters, one with RoCE and another with InfiniBand. Our intent was to build and learn from the operational experience. These learnings will inform the future direction of GenAI fabrics. We optimized the RoCE cluster for quick build time, and the InfiniBand cluster for full-bisection bandwidth. We used both the InfiniBand and RoCE clusters to train Llama 3, with the RoCE cluster used for training the largest model. Despite the underlying network technology differences between these clusters, we were able to tune both of them to provide equivalent performance for these large GenAI workloads.

We optimized three aspects of the overall stack to make network communication for GenAI models performant on both clusters:

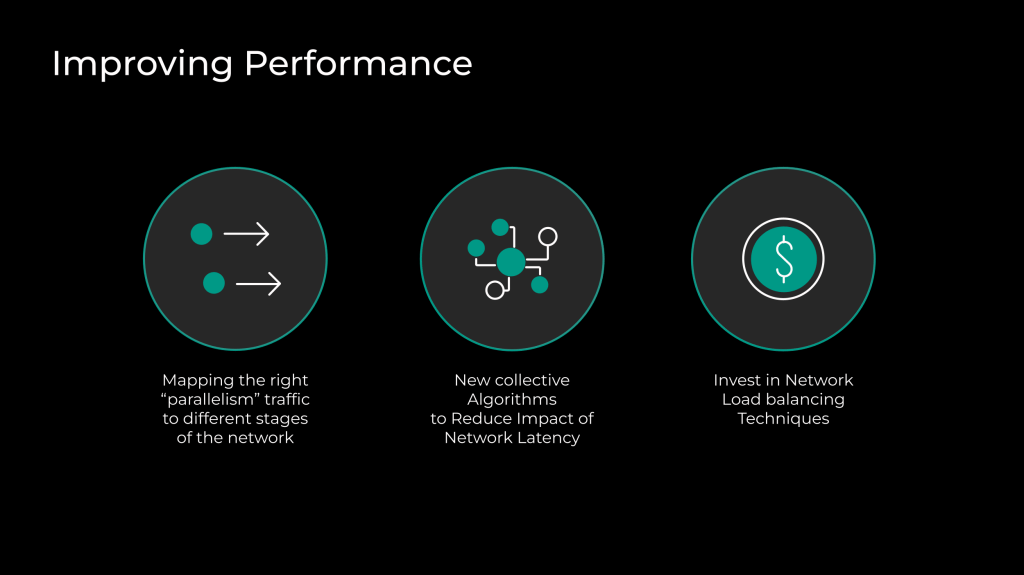

- We assigned the communication patterns resulting from different model, data, and pipeline parallelisms to different layers of the network topology so that the network capabilities were effectively exploited.

- We implemented collective communication patterns with network topology awareness so that they can be less latency-sensitive. We do this by replacing the default implementation of collectives with custom algorithms such as recursive doubling or halving instead of conventional algorithms like rings.

- Just like ranking jobs, GenAI jobs produce additional fat flows that make it hard to distribute traffic across all possible network paths. This required us to invest further in network load balancing and routing to achieve an optimal distribution of traffic across network resources.
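The difference between recursive doubling and a ring, mentioned in the second point, shows up in the number of communication steps. The simulation below is a textbook sketch of a sum all-reduce on a power-of-two number of ranks, with Python lists standing in for GPUs; it is not our collective library, and the function names are illustrative.

```python
def recursive_doubling_allreduce(values):
    """Simulate a sum all-reduce; returns (per-rank results, number of communication steps)."""
    n = len(values)
    if n == 0 or n & (n - 1):
        raise ValueError("this sketch assumes a power-of-two number of ranks")
    current = list(values)
    steps = 0
    distance = 1
    while distance < n:
        # Each rank exchanges its partial sum with the partner whose rank differs in one bit.
        current = [current[rank] + current[rank ^ distance] for rank in range(n)]
        distance *= 2
        steps += 1
    return current, steps

def ring_allreduce_steps(n):
    """Step count of a ring all-reduce (reduce-scatter followed by all-gather)."""
    return 2 * (n - 1)

results, steps = recursive_doubling_allreduce([1, 2, 3, 4, 5, 6, 7, 8])
```

With 8 ranks, recursive doubling finishes in 3 steps while a ring needs 14, so the step count grows logarithmically rather than linearly with the number of ranks. The trade-off is that each recursive-doubling step sends the full buffer, whereas a ring sends only a fraction of it per step.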

We spoke in depth about our RoCE load-balancing techniques at Networking @Scale 2023.
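One way to see why a few fat flows are hard to spread evenly is to assume that each flow is pinned to one path by a uniform random hash, as in per-flow hashing schemes. That assumption is ours for the sake of illustration, and it is not a description of the load-balancing techniques referenced above. Under it, the expected number of paths that carry no traffic has a closed form:

```python
def expected_idle_paths(num_paths, num_flows):
    """Expected number of paths that receive no flow under uniform random per-flow hashing."""
    return num_paths * (1.0 - 1.0 / num_paths) ** num_flows

few_fat_flows = expected_idle_paths(8, 8)      # between 2 and 3 of 8 paths sit idle
many_small_flows = expected_idle_paths(8, 800) # effectively zero
```

With as many flows as paths, roughly a third of the paths are expected to be idle while others carry several flows, whereas many small flows cover every path almost surely.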

Storage

We need efficient data-storage solutions to store the vast amounts of data used in model training. This involves investing in high-capacity and high-speed storage technologies and developing new data-storage solutions for specific workloads.

Looking ahead

In the next few years we will be working with hundreds of thousands of GPUs, handling even larger volumes of data, and dealing with longer distances and latencies. We’ll be adopting new hardware technologies, including newer GPU architectures, and evolving our infrastructure.

These challenges will push us to innovate and adapt in ways we can’t fully predict yet. But one thing is certain: We are only at the beginning of this journey. As we continue to navigate the evolving landscape of AI, we remain committed to pushing the boundaries of what’s possible.